In Part 1 of the Chatbot Testing blog, we gave an overview of Chatbots and why they have become pervasive in our lives today. We also looked at the advantages and disadvantages of using a Chatbot in business.

We also covered Chatbot testing areas such as Intent Testing, the BOT’s way of Introduction, Conversational Flow, Accuracy, Natural Language Processing Friendliness and Latency (Speed) of the BOT, among other aspects of testing a BOT.

In Part 2 of the Chatbot Testing blog, we will look at testing areas such as Intent Hopping, Validation of Input, Graphical Rendering of Content, Error Management (the BOT’s ability to fail gracefully) and User Friendliness, among others.

Chatbot Testing

Let us look at the other features of a Chatbot that should be tested.


- Chatbot should be able to easily hop from one intent to another:

Sometimes the BOT expects a reply from the user, but instead of answering, the user asks the BOT a different question. The BOT should be intelligent enough to recognize that the user now has a different intent, hop to this new intent and reply accordingly. For example, to find out which office address the user wants, the BOT may ask: “We have offices in Gurgaon, Pune, Mumbai, Kolkata, Bengaluru and Hyderabad. Which location’s address do you wish to know?” If, instead of answering with a location name, the user asks “What is the leave policy at the company?”, a BOT that cannot hop between intents will not recognize that the user has raised a different intent and will keep repeating the question about the location.
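The hop can be sketched as follows. This is a minimal illustration, assuming a toy keyword matcher in place of the NLU model a real BOT would use; `INTENT_KEYWORDS`, `detect_intent` and `handle_reply` are hypothetical names. While the BOT is waiting for a location, a reply that matches a different intent is answered directly instead of triggering the location question again.

```python
import re

# Hypothetical keyword sets standing in for a trained intent classifier.
INTENT_KEYWORDS = {
    "office_address": {"address", "office", "location"},
    "leave_policy": {"leave", "policy", "holiday"},
}
OFFICE_CITIES = {"gurgaon", "pune", "mumbai", "kolkata", "bengaluru", "hyderabad"}
LOCATION_QUESTION = ("We have offices in Gurgaon, Pune, Mumbai, Kolkata, Bengaluru "
                     "and Hyderabad. Which location's address do you wish to know?")


def _words(text):
    """Lower-case the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def detect_intent(text):
    """Return the intent with the largest keyword overlap, or None if nothing matches."""
    words = _words(text)
    scores = {intent: len(words & kw) for intent, kw in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def handle_reply(awaiting_location, text):
    """Handle one user message; returns (still_awaiting_location, bot_reply)."""
    cities = _words(text) & OFFICE_CITIES
    if awaiting_location and cities:
        return False, f"Here is the address of our {sorted(cities)[0].title()} office."
    intent = detect_intent(text)
    if intent == "leave_policy":
        # Intent hop: answer the new question instead of re-asking for a location.
        return False, "Here is a summary of the company's leave policy."
    if intent == "office_address" or awaiting_location:
        return True, LOCATION_QUESTION
    return False, "I am sorry, I could not understand what you just said."
```

A tester's check would then be that `handle_reply(True, "What is the leave policy at the company?")` returns the leave-policy answer rather than `LOCATION_QUESTION`.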

To test this, the tester should deliberately pose such queries in succession and ensure that the BOT switches between intents seamlessly rather than getting stuck in the context of the original intent.

- Spelling and grammatical errors should be avoided in responses:

The tester must ensure that there are no spelling or grammatical errors in the BOT’s responses. All sentences should be correctly capitalized and should end with a full stop. Wherever lists are displayed, they should be properly formatted with bullets or numbers.

- BOT’s ability to validate inputs like email addresses, zip codes and mobile numbers:

Scenario 1: It is often found that when the BOT accepts input such as email addresses, it accepts invalid ones too. It is advisable to validate email address formats with a regular expression (regex), and the tester should rigorously test the BOT against invalid formats. Common tests include checking whether the BOT accepts an email address that contains a space or lacks the at sign (@).
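A minimal regex check of the kind Scenario 1 recommends might look like the sketch below. The pattern is an assumption chosen for illustration: it only requires exactly one "@", no whitespace and a dot in the domain, so it is deliberately simpler than a full RFC 5322 validator. `INVALID_SAMPLES` lists hypothetical negative cases a tester could feed the BOT.

```python
import re

# Illustrative pattern: one "@", no whitespace anywhere, and a dot in the domain.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(value: str) -> bool:
    """Return True only if the whole value looks like an email address."""
    return EMAIL_PATTERN.fullmatch(value) is not None


# Negative cases: missing "@", embedded space, no dot in the domain, double "@", empty.
INVALID_SAMPLES = ["roger gmail.com", "roger @gmail.com", "roger@gmail",
                   "roger@@gmail.com", ""]
```

Using `fullmatch` rather than `search` matters here: with `search`, a value like `"x roger@gmail.com"` would be accepted because a valid address appears somewhere inside it.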

Scenario 2: If the BOT is intended to accept Indian zip codes, it may accept a value such as 123456 simply because it has six digits. The tester should ensure that the BOT accepts only valid 6-digit Indian zip codes.
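Scenario 2 implies two separate checks: the format (Indian zip codes, or PIN codes, are six digits and do not start with 0) and whether the code actually exists. The sketch below assumes a hypothetical `KNOWN_PIN_CODES` sample set; a real BOT would load the full list of allocated codes from an authoritative postal directory.

```python
import re

# Six digits, first digit 1-9.
PIN_FORMAT = re.compile(r"[1-9][0-9]{5}")

# Hypothetical sample only; replace with the complete list of allocated codes.
KNOWN_PIN_CODES = {"110001", "400001", "560001", "700001"}


def check_pin_code(value: str) -> str:
    """Classify a zip code as 'ok', 'invalid format' or 'unknown PIN code'."""
    value = value.strip()
    if not PIN_FORMAT.fullmatch(value):
        return "invalid format"
    if value not in KNOWN_PIN_CODES:
        # Well-formed, but not in the list the BOT trusts.
        return "unknown PIN code"
    return "ok"
```

Keeping the two outcomes distinct lets the BOT give a more helpful message ("that does not look like a zip code" versus "I could not find that zip code").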

Scenario 3: If the BOT has to accept an Indian mobile number, it should validate that the value has exactly 10 digits and contains no characters other than digits.
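The rule in Scenario 3 translates directly into a full-string match. This sketch encodes only the two conditions stated above (exactly ten characters, all digits); any further rules about leading digits are left out as they are not part of this scenario.

```python
import re

# Exactly ten ASCII digits. [0-9] is used instead of \d because \d also
# matches non-ASCII digits in Python's Unicode-aware regular expressions.
MOBILE_PATTERN = re.compile(r"[0-9]{10}")


def is_valid_mobile(value: str) -> bool:
    """Return True if the value is exactly ten digits with nothing else."""
    return MOBILE_PATTERN.fullmatch(value) is not None
```

Good negative inputs for the tester are nine and eleven digits, embedded spaces or hyphens, and letters mixed into the number.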

Scenario 4: While testing BOTs that recognize voice input, it was found that even when the user spoke the email address roger@gmail.com, the BOT would transcribe it as “roger @ gmail.com”; that is, while converting the voice input to text, the BOT inserted a space before and after the “@” character. This happened no matter how clearly or at what speed the email address was spoken. In such situations, the tester should voice several variations of the email address and monitor the converted text output.
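When monitoring the converted text in Scenario 4, a small helper can flag transcripts with the spacing defect automatically. The sketch below also includes a hypothetical `normalize_spoken_email` post-processing step that a developer might add; it is only safe when the whole transcript is expected to be an email address, and the transcripts themselves would come from whatever voice-to-text component the BOT uses.

```python
import re

# Whitespace on either side of "@" or "." is the defect described above.
DEFECT_PATTERN = re.compile(r"\s[@.]|[@.]\s")
SPACED_SEPARATOR = re.compile(r"\s*([@.])\s*")


def has_spacing_defect(transcript: str) -> bool:
    """True if the speech-to-text output put spaces around '@' or '.'."""
    return DEFECT_PATTERN.search(transcript) is not None


def normalize_spoken_email(transcript: str) -> str:
    """Collapse spaces around '@' and '.' in a transcribed email address."""
    return SPACED_SEPARATOR.sub(r"\1", transcript.strip())
```

A tester can run `has_spacing_defect` over every transcript collected while voicing the address variants, and record which variants still fail.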

Scenario 5: Where inputs need to be validated, validation should happen immediately after each value is entered, not at the end of the conversation. If an input is invalid, the BOT should give the user the option to re-enter the value.
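One way to validate each value immediately, as Scenario 5 asks, is to collect fields one at a time and reject a bad value before moving on. This is a sketch under assumptions: the two-field form (`FIELDS`) and the `FormSession` class are hypothetical, and the validators reuse the same simple rules as the earlier scenarios.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical form: (field name, prompt, validator) for each step.
FIELDS: List[Tuple[str, str, Callable[[str], bool]]] = [
    ("mobile", "Please enter your 10-digit mobile number.",
     lambda v: re.fullmatch(r"[0-9]{10}", v) is not None),
    ("email", "Please enter your email address.",
     lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None),
]


class FormSession:
    """Collects fields one at a time, validating each value as soon as it arrives."""

    def __init__(self) -> None:
        self.values: Dict[str, str] = {}
        self.index = 0

    def current_prompt(self) -> Optional[str]:
        return FIELDS[self.index][1] if self.index < len(FIELDS) else None

    def submit(self, value: str) -> str:
        if self.index >= len(FIELDS):
            return "All details have already been recorded."
        name, prompt, validator = FIELDS[self.index]
        value = value.strip()
        if not validator(value):
            # Reject now and ask for this field again; earlier fields are kept.
            return f"That {name} value is not valid. {prompt}"
        self.values[name] = value
        self.index += 1
        next_prompt = self.current_prompt()
        return next_prompt if next_prompt else "Thank you, all details are recorded."
```

Because an invalid value never advances `index`, the user only re-enters the field that failed, rather than discovering at the end of the conversation that something earlier was wrong.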

Scenario 6: The BOT may expect an amount to be entered, followed by a second amount that must be less than the first. In such situations, the tester should deliberately enter values that violate this requirement and ensure that the BOT warns the user against such inputs.

- BOT’s ability to render graphical content without distortion:

Very often, the BOT’s responses include graphical content such as cards or tiles with embedded information, images, or suggestion chips with descriptions on them. The tester has to ensure that across all popular browsers, such as “Chrome”, “Firefox” and “Internet Explorer”, this graphical content appears undistorted and fits well within the BOT’s window. It should also be checked that the descriptions shown in suggestion chips or tiles do not overflow the boundary of that graphical element.

The tester should deliberately shrink the browser window and check whether the graphical content still appears undistorted and identical to how it appears in the maximized window. In this resized mode, the tester should fire queries whose responses contain graphical content such as images or tiles and check that it appears as it does in maximized mode. On maximizing the browser window again, the appearance of the graphical content should not change. All images should appear clear and undistorted and must fit well within the BOT’s boundary.

- Setting the text length of the queries:

There should be a limit on the length of the query that the BOT accepts. With a very long query, the BOT may start retrieving a response and never finish, or it may even crash because it cannot handle such large input. In these scenarios the BOT may also behave erratically and give an unfriendly response.

- BOT should fail gracefully if the situation so demands:

Many times, when the BOT fails to understand a query, it simply replies that there was an internal server error or a problem retrieving the response. In all situations where the BOT fails to understand the query or the intent behind it, it should instead reply with a graceful message such as: “I am sorry, I could not understand what you just said. Please try again with a valid input.”

In scenarios where the BOT expects some input from the user, such as a zip code, and the user repeatedly enters invalid values, the BOT should allow only a fixed number of invalid attempts. After that, instead of repeating “I am sorry, I could not understand what you just said. Please try again with a valid input.”, the BOT should direct the user to start from the beginning or otherwise help the user break out of the loop and start afresh. If the user enters junk or special characters, the BOT should likewise fail gracefully and reply with a user-friendly response.
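The retry limit and graceful replies described above can be sketched as a small prompt handler. The limits `MAX_INVALID_ATTEMPTS` and `MAX_QUERY_LENGTH` and the message texts other than the quoted apology are hypothetical values chosen for illustration; the length check reflects the earlier point about limiting query length. Junk, special characters and malformed zip codes all fall through to the same friendly path, so the user never sees an internal error.

```python
import re

MAX_INVALID_ATTEMPTS = 3   # hypothetical retry budget
MAX_QUERY_LENGTH = 256     # hypothetical query length limit

RETRY_MESSAGE = ("I am sorry, I could not understand what you just said. "
                 "Please try again with a valid input.")
RESTART_MESSAGE = ("It looks like we are stuck. Type \"restart\" to start afresh, "
                   "or ask me something else.")
TOO_LONG_MESSAGE = (f"Your message is too long. Please keep it to "
                    f"{MAX_QUERY_LENGTH} characters or fewer.")

PIN_FORMAT = re.compile(r"[1-9][0-9]{5}")


class PinCodePrompt:
    """Asks for a zip (PIN) code and fails gracefully on bad input."""

    def __init__(self) -> None:
        self.invalid_attempts = 0

    def handle(self, text: str) -> str:
        if len(text) > MAX_QUERY_LENGTH:
            # Refuse oversized input up front instead of trying to process it.
            return TOO_LONG_MESSAGE
        value = text.strip()
        if PIN_FORMAT.fullmatch(value):
            self.invalid_attempts = 0
            return f"Thank you. Looking up zip code {value}."
        self.invalid_attempts += 1
        if self.invalid_attempts >= MAX_INVALID_ATTEMPTS:
            # Break the loop instead of repeating the same apology again.
            self.invalid_attempts = 0
            return RESTART_MESSAGE
        return RETRY_MESSAGE
```

For testing, the interesting inputs are junk such as `"@#$%"`, a run of invalid values that reaches the limit, and a very long string.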

- BOT should not get affected by typos in the query:

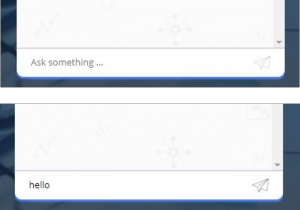

The BOT should be intelligent enough to understand a query even if it contains spelling mistakes. If the user meant to type “California” but typed “Calafornia”, the BOT should not be stumped. Such typos happen often, and the BOT should handle them by working out what the user actually meant and providing the same accurate response. The tester should therefore deliberately try misspelled variants of common keywords in queries and observe whether the BOT still understands the user’s intention.

- BOT’s GUI should be user-friendly and easy to use:

It is the responsibility of the tester to make valuable suggestions about the GUI features the BOT should have. For example, the “Send” button should have a “Send” tooltip and an easily identifiable icon. Further, the “Send” button should be disabled by default and become enabled only when valid text is typed in the text field.

Moreover, the user should be able to retrieve, edit and resubmit a previous query by pressing the “Up Arrow” key on the keyboard. While a response is being retrieved, an appropriate, space-saving progress indicator should be shown to the user. When the BOT has to give the user a list of options to choose from, these options can be presented as suggestion chips (clickable hyperlinked areas with a description on them) which, when clicked, give the necessary information about the respective option.
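The Send-button and Up-Arrow behaviours above are easy to pin down as logic, which helps when writing test cases for them. The sketch is in Python for consistency with the other examples; a real chat widget would implement this in its front-end code. Treating "valid text" as non-blank text is an assumption, and `send_enabled` and `QueryHistory` are hypothetical names.

```python
from typing import List, Optional


def send_enabled(text: str) -> bool:
    """The Send button stays disabled until the field holds non-blank text."""
    return text.strip() != ""


class QueryHistory:
    """Up Arrow recall of earlier queries, stepping back from the most recent."""

    def __init__(self) -> None:
        self._items: List[str] = []
        self._cursor: Optional[int] = None  # None means "not browsing history"

    def add(self, query: str) -> None:
        """Record a submitted query and stop browsing."""
        self._items.append(query)
        self._cursor = None

    def up(self) -> Optional[str]:
        """Return the previous query (older on each press), or None if there is none."""
        if not self._items:
            return None
        if self._cursor is None:
            self._cursor = len(self._items) - 1
        elif self._cursor > 0:
            self._cursor -= 1
        return self._items[self._cursor]
```

Test cases follow naturally: the button is disabled for an empty or whitespace-only field, and repeated Up Arrow presses walk back through earlier queries and stop at the oldest one.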

- Finally, some common but important pitfalls to look out for while testing a Chatbot:

- When showing suggestion chips as part of a query's response, ensure that the chips are relevant to and consistent with the context of both the parent query and its response.

- Make sure that a suggestion chip, when clicked, does not give the same information as its parent query.

- Make sure that suggestion chips, when clicked, show relevant and accurate information.

- Make sure that clicking any suggestion chip or firing any query never leaves the BOT stuck in an indefinite process without retrieving any information.

- Do not show too many suggestion chips as part of a query's response. A maximum of five suggestion chips would be ideal and acceptable.

- Keep the text shown on suggestion chips crisp, brief and to the point.

- If carousels are displayed, make sure that the information on the carousel is easy to read and does not appear distorted.

- For BOTs that are meant to work on Google Assistant, ensure that the application always exits when the user types or says words like "exit" or "cancel".

- Always keep a keen eye on the functionality of the application and ensure that the BOT is tested thoroughly for it. For example, if the BOT has to retrieve some information based on certain inputs, make sure that it retrieves that information, if available, for all valid combinations of those input values.

- For queries the BOT does not understand, make sure that after each failed attempt at the same query, the BOT rephrases its question instead of repeating it word for word.

- Make sure that the BOT does not crash at any stage. In particular, look out for crashes after which the BOT does not restart even when it is invoked again.

- Keep a keen eye on utterance issues in which the BOT introduces spaces, or converts the intended spoken word into some other text that its “voice to text” conversion component has been trained to produce.

- Always ensure that validation is implemented for all input values, such as numeric values and email addresses.

- Wherever the BOT accepts input parameter values in a sequence, ensure that it never substitutes one parameter's input value for another parameter's value. Such issues are common and can be fixed, so the tester should report them to the developers.

- To ensure test coverage, try to identify all queries that have no matching intent and for which the BOT fails to retrieve a valid response when it should have.

- Try to find as many scenarios as possible in which the BOT gives a different response to a query because the query was wrongly mapped to a different intent.
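Several of the suggestion-chip pitfalls in the list above can be checked automatically once a reply has been captured. The sketch assumes a hypothetical reply structure (`BotReply` with a list of `Chip` objects, each holding the text the BOT returns when it is clicked) and a hypothetical 25-character limit for "brief" chip text; the limit of five chips comes from the list. Relevance and accuracy of chip content still need human judgement and are not covered.

```python
from dataclasses import dataclass, field
from typing import List

MAX_CHIPS = 5        # from the guideline above
MAX_CHIP_TEXT = 25   # hypothetical limit for "brief" chip text


@dataclass
class Chip:
    label: str
    response: str    # what the BOT replies when the chip is clicked


@dataclass
class BotReply:
    query: str
    response: str
    chips: List[Chip] = field(default_factory=list)


def chip_problems(reply: BotReply) -> List[str]:
    """Return human-readable problems found in a reply's suggestion chips."""
    problems = []
    if len(reply.chips) > MAX_CHIPS:
        problems.append(f"{len(reply.chips)} chips shown; at most {MAX_CHIPS} expected")
    for chip in reply.chips:
        if len(chip.label) > MAX_CHIP_TEXT:
            problems.append(f"chip text too long: {chip.label!r}")
        if not chip.response.strip():
            # Clicking the chip retrieved no information.
            problems.append(f"chip returns nothing: {chip.label!r}")
        elif chip.response.strip() == reply.response.strip():
            # Clicking the chip just repeats the parent query's answer.
            problems.append(f"chip repeats the parent response: {chip.label!r}")
    return problems
```

An empty list means the reply passed these mechanical checks; any entries can go straight into a bug report.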

These are some of the common scenarios in Chatbot testing that a tester must consider. Read Chatbot Testing: Getting it right the first time - Part 1 for more test scenarios.
