Client Background

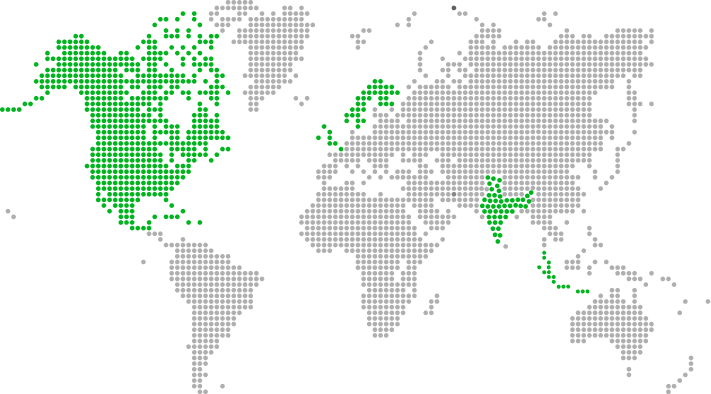

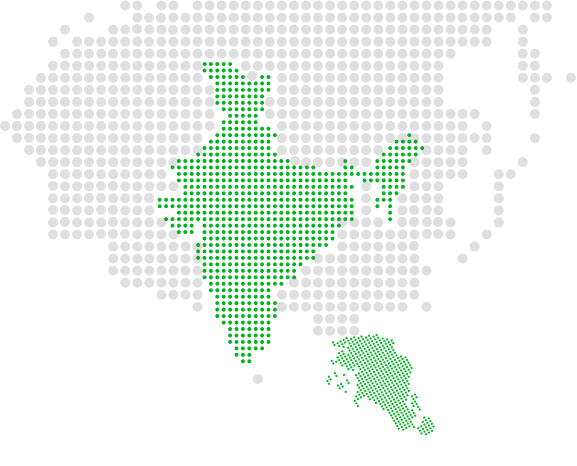

Our FinTech client handles payments for their clients, vendors, and online advertising partners. The business was recently acquired by a parent company, and the client wanted to migrate from AWS to Azure to standardize its cloud resources on the new owner’s Microsoft platform. One of the key activities was migrating the data consumption script from AWS to Azure. Because of Xoriant’s deep experience with both AWS and Azure, the client engaged Xoriant to strategize and test the migration, provide knowledge transfer, and ensure business continuity.

The key objectives of this migration included:

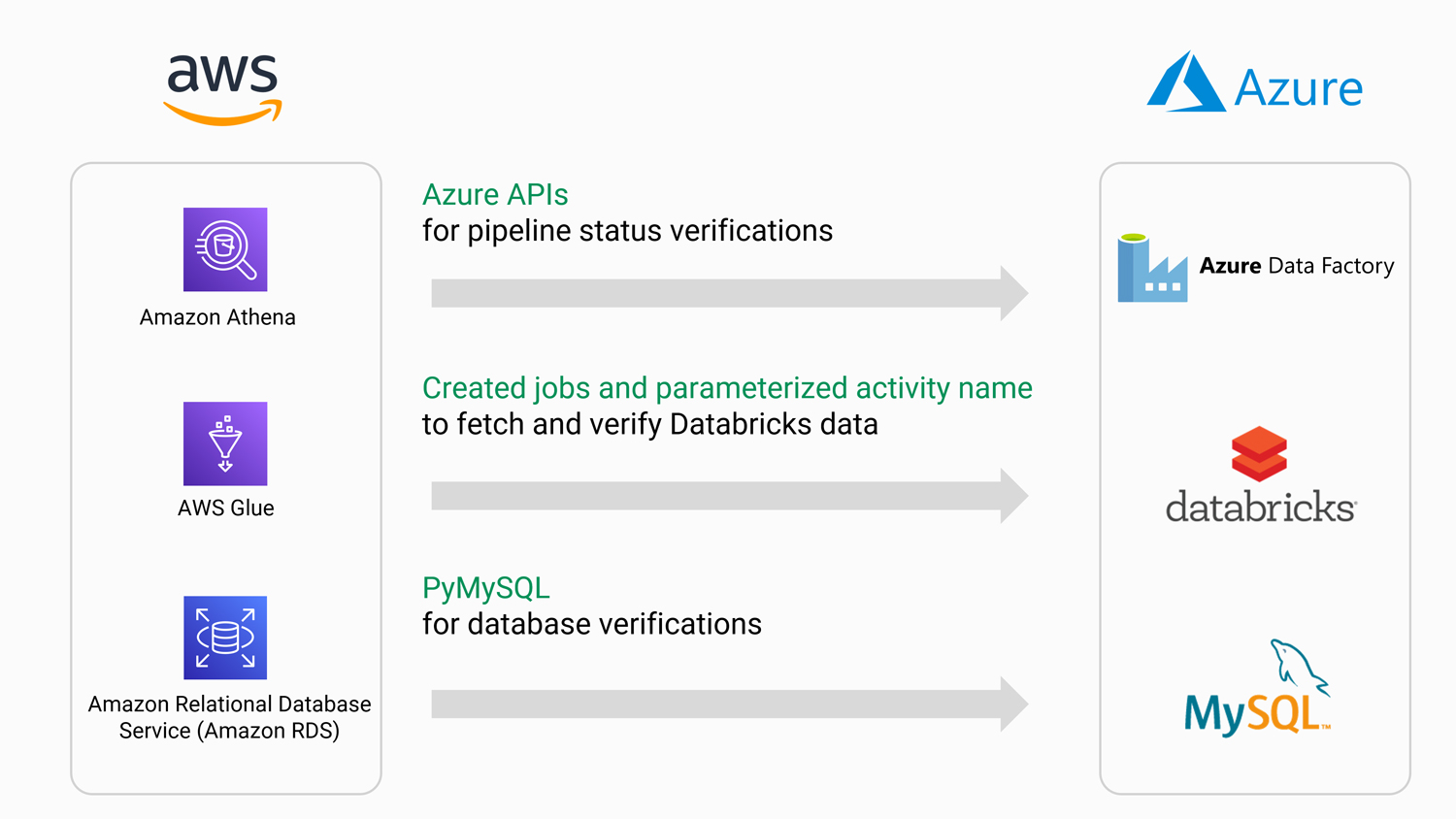

- Migrate the data consumption script from the AWS services (Glue, Athena, and RDS) to their Azure counterparts (Data Factory, Databricks, and MySQL DB).

- Verify the implementation status of cloud services after data consumption script migration.
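As an illustration of the second objective, a status check for a single Data Factory pipeline run could be sketched as below. This is a minimal sketch rather than the project's actual script: it assumes the Azure Resource Manager REST endpoint for Data Factory pipeline runs (API version `2018-06-01`), a bearer token obtained elsewhere, and hypothetical helper names (`pipeline_run_url`, `http_get_json`, `pipeline_succeeded`).

```python
import json
import urllib.request

ARM = "https://management.azure.com"
API_VERSION = "2018-06-01"


def pipeline_run_url(subscription_id, resource_group, factory_name, run_id):
    """Build the ARM URL for a single Data Factory pipeline run."""
    return (
        f"{ARM}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
        f"/pipelineruns/{run_id}?api-version={API_VERSION}"
    )


def http_get_json(url, token):
    """GET a URL with a bearer token and decode the JSON body."""
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def pipeline_succeeded(url, token, fetch=http_get_json):
    """Return True when the pipeline run reports status 'Succeeded'.

    `fetch` is injectable so the check can be exercised without a
    live Azure subscription (an assumption made for testability).
    """
    run = fetch(url, token)
    return run.get("status") == "Succeeded"
```

Keeping the HTTP call behind a replaceable `fetch` argument lets a test suite verify the status logic with canned responses before pointing it at a real factory.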

Xoriant Solution | Key Contributions

Xoriant’s experts performed AWS and Azure cloud service analysis during migration of the data consumption script. We reviewed AWS automation code and created strategies for automating data migration to Azure.

Key components of the strategy included:

- Verified how data consumption works differently in Azure as compared to AWS:

In AWS, there were various Glue jobs that required data validation via Athena queries. In Azure, these jobs were converted to activities and grouped into one Data Factory pipeline. Each activity in the Data Factory pipeline is linked to a PySpark job stored in the Databricks workspace.

- Fetched data from Databricks:

Since fetching data directly from Databricks is not allowed in Azure, we created a Databricks job and ran it with the activity name as a parameter, so that the job fetched the data for that activity.

- Identified a scripting strategy:

The AWS SDK for Python (boto3) was used to validate Glue jobs, Athena scripts, and RDS. For the equivalent implementation in Azure, Azure APIs were used instead of the Azure SDK for pipeline status verification. This enabled a faster process with fewer errors.

- Reused DB script:

We reused the code for all DB validations from AWS for Azure data ingestion during QA automation, which prevented code duplication.

- Verified database migration data:

PyMySQL was used for database data verification. We reused 100% of the automation script for the database migration from Amazon RDS to Azure MySQL.
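The reuse of database validation code across both clouds can be illustrated with a check that depends only on a DB-API 2.0 connection, not on where the database is hosted. The following is a hedged sketch, not the project's script: the function names and the row-count comparison are assumptions chosen for illustration, and the real validations may have checked more than counts.

```python
import re


def row_count(connection, table):
    """Count rows in `table` through any DB-API 2.0 connection.

    Assumes the default tuple-returning cursor (not a dict cursor).
    """
    # Table names cannot be bound as query parameters, so accept
    # only plain identifiers before interpolating.
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", table):
        raise ValueError(f"unexpected table name: {table!r}")
    cursor = connection.cursor()
    try:
        cursor.execute(f"SELECT COUNT(*) FROM {table}")
        return cursor.fetchone()[0]
    finally:
        cursor.close()


def compare_row_counts(source, target, tables):
    """Return {table: (source_count, target_count)} for tables that differ."""
    mismatches = {}
    for table in tables:
        counts = (row_count(source, table), row_count(target, table))
        if counts[0] != counts[1]:
            mismatches[table] = counts
    return mismatches
```

With PyMySQL, `source` and `target` would each come from `pymysql.connect(host=..., user=..., password=..., database=...)`, one pointed at the Amazon RDS instance and one at Azure MySQL; the validation functions themselves stay unchanged.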

High Level Project Diagram

Key Benefits

- Ensured successful testing with an end-to-end data consumption script migration strategy and phase-wise implementation.

- Ensured alignment with the Azure Databricks design by creating a “helper” Databricks job and running it with the activity title as a parameter.
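The “helper” job approach could look roughly like the sketch below, which triggers a Databricks job through the Jobs REST API (`/api/2.1/jobs/run-now`) and interprets the run state returned by `/api/2.1/jobs/runs/get`. Several details are assumptions: the parameter key `activity_name`, the use of `notebook_params` to carry it, the function names, and a personal access token supplied by the caller.

```python
import json
import urllib.request


def run_now_payload(job_id, activity_name):
    """Body for run-now, passing the activity as a notebook parameter.

    The key "activity_name" is hypothetical; the real job may expect
    a different parameter name or parameter type.
    """
    return {"job_id": job_id, "notebook_params": {"activity_name": activity_name}}


def trigger_helper_job(workspace_url, token, job_id, activity_name):
    """Start the helper job and return the run_id reported by Databricks."""
    request = urllib.request.Request(
        f"{workspace_url}/api/2.1/jobs/run-now",
        data=json.dumps(run_now_payload(job_id, activity_name)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["run_id"]


def run_finished_ok(run):
    """Interpret a runs/get response: True only for a successful, finished run."""
    state = run.get("state", {})
    return (
        state.get("life_cycle_state") == "TERMINATED"
        and state.get("result_state") == "SUCCESS"
    )
```

A test would typically call `trigger_helper_job` once per Data Factory activity, poll the run until it terminates, and then read the job output to compare against the expected data.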

Technology Stack

Python | PyTest BDD | PyMySQL | Amazon Web Services (Athena, Glue, RDS) | Azure (Data Factory, Databricks, MySQL) | Azure APIs
