According to International Data Corporation (IDC), by 2021 more than half of the Global 2000 will see an average of one-third of their digital services transactions flow through their open API ecosystems. The situation is no different for independent software and hardware vendors (ISVs and IHVs), and it points in a clear direction: ecosystem expansion is the way forward. In today’s era of data-driven business, rigid legacy IT and cloud infrastructure ecosystems cannot meet customers’ expectations. Even integrated systems encounter numerous challenges in meeting day-to-day business deliverables.

In recent years, we have also seen the rapid emergence of multi-vendor implementations, each with its own infrastructure. This heterogeneity in enterprise infrastructure, combined with large-scale systems integration methods, reduces customers’ flexibility in terms of negotiating power, reaction to price increases, and freedom to change service providers. Moreover, many customers are unaware of the proprietary standards that inhibit the interoperability and portability of applications when they take services from vendors.

Interoperability and portability, achieved through the approaches described below, are the key to boosting your infrastructure product’s value and preparing a future-ready ecosystem for your business. In this blog, we aim to give you value indicators for a successful infrastructure ecosystem expansion built on standardized open APIs, composable infrastructure, microservice architecture, a software-defined approach, interoperability, and portability.

Understanding the need for Ecosystem Expansion

Ecosystem expansion is the cornerstone for the growth of future-ready infrastructure products. Enterprises need to expand their infrastructure quickly, not only in terms of software and hardware but also in performance, scalability, security, monitoring, customization, and optimization, to achieve the desired results.

Also, even when integration succeeds, neither legacy nor cloud infrastructure guarantees optimal performance for storage, servers, software, and network. The infrastructure needs to be constantly monitored for likely errors in software and hardware performance. Hence, simply moving to modern technology will not ease your customer’s business challenges. Thinking beyond hardware and software is indispensable right now.

Now is the right time to think of Ecosystem Expansion – Why?

- Optimize the existing ecosystem to leverage system software, automate processes, adopt cloud-based services, and expand platforms. At present, rigid traditional gear such as dedicated servers, storage arrays, and network switches sometimes lacks key features needed to support modernization, adding to complexity instead of eliminating it.

- Capitalize on the shift towards new virtualization technologies, as many businesses adopt technologies such as desktop virtualization, software-defined networking and storage, and cloud-based virtualization.

- Achieve optimal agility, simplicity, and reduced costs as companies need to handle workloads more efficiently. Efficiency has become a top-of-mind priority as we navigate the COVID-19 crisis.

- Boost productivity by accommodating different interoperable workflows, preferred tools, and seamless integration of heterogeneous environments.

- Break down data silos that cause poor performance and scalability problems, and instead achieve consistently high customer satisfaction with respect to stability and reliability.

How to benefit from the Ecosystem Expansion approach?

Let us look at how to gain business value from ecosystem expansion by adopting a few pragmatic approaches and techniques.

1. Adopting standards-based platforms with open APIs

In multi-vendor infrastructure scenarios, proprietary protocols, logging mechanisms, and management interfaces are major roadblocks and an administrative nightmare. Customers can avoid these closed systems by leveraging standards-based mechanisms.

Moreover, IT administrators began seeking ways to pull data out of infrastructure equipment and then manipulate or analyze it to make intelligent decisions. Again, many infrastructure vendors used proprietary data collection processes that kept data access and analysis inside a closed system. Infrastructure operators should therefore opt for an API-first approach to achieve flexible and scalable end-to-end interoperability for converged, hybrid IT.

Below are examples of standard APIs for modern IT:

- Redfish API (HTTP, REST, JSON) to enable management of compute, network, storage, and facilities equipment through a simple interface

- System Management BIOS (SMBIOS) standard for delivering management information via system firmware
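To make the Redfish entry concrete, here is a minimal Python sketch of how a client might walk a Redfish resource collection. It relies only on conventions from the DMTF Redfish specification: the service root lives at `/redfish/v1/`, and collections such as `Systems` list their members as objects carrying an `@odata.id` URI. The base URL `https://bmc.example.com`, the sample response, and the helper names are hypothetical; a real client would fetch each URI over authenticated HTTPS rather than parse a canned string.

```python
from __future__ import annotations

import json
from urllib.parse import urljoin

# Per the Redfish specification, every service exposes its root here.
SERVICE_ROOT = "/redfish/v1/"


def systems_collection_url(base_url: str) -> str:
    """Build the URL of the ComputerSystem collection on a service."""
    return urljoin(base_url, SERVICE_ROOT + "Systems")


def member_uris(collection_json: str) -> list[str]:
    """Return the @odata.id URI of every member in a Redfish collection."""
    collection = json.loads(collection_json)
    return [member["@odata.id"] for member in collection.get("Members", [])]


def absolute_urls(base_url: str, uris: list[str]) -> list[str]:
    """Resolve member URIs (which are server-relative) against the base URL."""
    return [urljoin(base_url, uri) for uri in uris]


if __name__ == "__main__":
    base = "https://bmc.example.com"  # hypothetical management controller
    # Hypothetical body of a GET on systems_collection_url(base)
    sample = json.dumps({
        "@odata.id": "/redfish/v1/Systems",
        "Members@odata.count": 2,
        "Members": [
            {"@odata.id": "/redfish/v1/Systems/1"},
            {"@odata.id": "/redfish/v1/Systems/2"},
        ],
    })
    print(systems_collection_url(base))
    print(absolute_urls(base, member_uris(sample)))
```

Because conformant services share the same URI and JSON conventions, the same few lines should, in principle, work against management controllers from different vendors, which is the interoperability benefit described above.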

2. Adopting composable infrastructure approach

Composable infrastructure is when compute, storage, and networking resources are decoupled from the hardware they reside on (whether that is CPUs, storage disks, or memory devices) and are made available to applications that are running on any node in a data center. It is an approach that enables enterprise and on-premises data centers to better resemble the public cloud’s resource availability and ease of use. A selling point of composable infrastructure is that it reduces overprovisioning and keeps resources from sitting idle in forgotten, siloed servers.

Composable infrastructure relies on intelligent software that lets administrators easily determine which resources are available and whether the hardware is configured appropriately. Composable systems also use automated diagnostics to pinpoint hardware issues, making the data center easier to manage. This level of self-orchestration can dramatically reduce the need for human intervention, which in turn improves productivity and lowers the rate of human error.
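The pooling and on-demand composition described above can be sketched as a toy allocator in Python. This is purely illustrative and assumes hypothetical capacity units (CPU cores, GB of memory, TB of storage) and a hypothetical `ResourcePool` class; it is not how any particular composable platform is implemented. A logical node is composed only if the shared pool has enough free capacity, and releasing it returns the resources to the pool instead of leaving them idle in a siloed server.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Shared pool of disaggregated resources, in hypothetical units."""
    cpus: int
    memory_gb: int
    storage_tb: int
    # logical node name -> (cpus, memory_gb, storage_tb) carved out for it
    allocations: dict[str, tuple[int, int, int]] = field(default_factory=dict)

    def available(self) -> tuple[int, int, int]:
        """Capacity not currently assigned to any composed node."""
        used = [sum(alloc[i] for alloc in self.allocations.values()) for i in range(3)]
        return (self.cpus - used[0], self.memory_gb - used[1], self.storage_tb - used[2])

    def compose(self, name: str, cpus: int, memory_gb: int, storage_tb: int) -> bool:
        """Compose a logical node from the pool; return False if capacity is short."""
        if name in self.allocations:
            raise ValueError(f"node {name!r} is already composed")
        request = (cpus, memory_gb, storage_tb)
        if any(want > free for want, free in zip(request, self.available())):
            return False
        self.allocations[name] = request
        return True

    def release(self, name: str) -> None:
        """Return a node's resources to the shared pool."""
        self.allocations.pop(name, None)


pool = ResourcePool(cpus=64, memory_gb=512, storage_tb=100)
print(pool.compose("db-node", cpus=16, memory_gb=128, storage_tb=20))   # True
print(pool.available())                                                 # (48, 384, 80)
print(pool.compose("analytics", cpus=64, memory_gb=64, storage_tb=10))  # False: only 48 CPUs free
pool.release("db-node")
print(pool.available())                                                 # (64, 512, 100)
```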

Benefits of composable infrastructure:

- API-driven IT, with infrastructure defined as code

- Workload-centricity

- On-demand availability of necessary resources

- Transfer of essential fabric resources into shared pools

- Combines elements of both Converged Infrastructure (CI) and Hyperconverged Infrastructure (HCI)

- Able to interact with bare metal servers, Virtual Machines (VMs), and containers

3. Adopting microservice architecture

Legacy systems were often built without modularity: they lack the code sharing of modern systems, so a single change can require modifying many blocks of code instead of just one. The vendor who sold you the system may no longer support it, or may not even exist, which makes patching the system much more difficult. And as the staff who implemented the legacy system leave or retire, you need to train newcomers to use it.

As business applications evolve from a rigid monolithic architecture to an agile microservice-based architecture, networking and storage vendors can start developing plug-ins for containers to address scenarios such as:

- Lift and shift: migrating a legacy application into a container as-is

- Refactoring a legacy application: repurposing monolithic code into microservice-based operations

- Greenfield deployment: writing new code for a fresh deployment

Developing data management and data protection software with a microservices architecture helps customers on their journey of migrating to the cloud.

4. Adopting software-defined approach for optimization

A software-defined approach represents all “abstracted” resources, including compute, storage, and networking, in software form. It eliminates the problems of infrastructure being managed by many administrators and of relying on proprietary hardware. It also helps achieve on-demand scalability, performance, availability, and easier upgrades of infrastructure software and hardware through a single-pane-of-glass GUI.

Moreover, a software-defined platform such as VMware Cloud Foundation (VCF) brings together technologies such as VMware vSAN for hyperconverged infrastructure (HCI) and VMware NSX-T for network virtualization. With the software-defined approach, you can extend your on-premises data center to the public cloud. You can even start your journey towards a hybrid cloud or multi-cloud environment with integrated security for your containerized apps, microservices networking for Kubernetes, micro-segmentation, automation, and orchestration.

This approach also helps enhance your End-User Computing (EUC) experience or Virtual Desktop Infrastructure (VDI) environment, especially in healthcare, research, and development centers where digital workspaces are application-driven.

Software-defined technologies like SD-WAN, due to their virtualized overlay nature, bring in benefits like simplified management, better network visibility, reduced cost, and less vendor lock-in.

5. Adopting integrity, interoperability, and portability

To enable ecosystem expansion, adopting interoperability and portability should be a top priority for your business. Doing so will enhance the systems’ ability to work efficiently and effectively across various software or hardware platforms.

Ecosystem expansion is emerging as an essential element in enterprise infrastructure strategy as companies seek to mitigate vendor lock-ins, ensure business continuity, and navigate through fluctuating workloads. At its core, interoperability requires shared processes, APIs, software-based adapters, and a policy-driven approach across various vendor platforms to enable communication between an application and software-hardware components.

Plug-ins and published software adapters act as a bridge between different resources (compute, storage arrays, networking components, and operating systems), offering the enterprise the toolkit it needs to port and interoperate systems. For instance, a vendor can provide virtualized SAP HANA adapters for legacy systems on the VMware platform. These adapters provide significant cost savings for clients who would otherwise need to secure additional data center resources to support modern SAP systems. They also allow administrators to extend connectivity across various operating system environments, with access to storage and compute management functionality for automating various tasks.
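The bridging role of plug-ins and adapters is essentially the adapter design pattern. A minimal Python sketch follows, assuming a hypothetical common `StorageAdapter` interface, a hypothetical `provision` entry point, and two made-up vendor backends; the identifier strings they return stand in for the calls a real adapter would make to each vendor’s management API.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class StorageAdapter(ABC):
    """Common interface the platform calls, whatever the array vendor."""

    @abstractmethod
    def create_volume(self, name: str, size_gb: int) -> str:
        """Create a volume and return the backend's identifier for it."""


class VendorAAdapter(StorageAdapter):
    """Hypothetical adapter for 'vendor A'; a real one would call its API."""

    def create_volume(self, name: str, size_gb: int) -> str:
        return f"vendorA:{name}:{size_gb}GB"


class VendorBAdapter(StorageAdapter):
    """Hypothetical adapter for 'vendor B', which sizes volumes in MB."""

    def create_volume(self, name: str, size_gb: int) -> str:
        return f"vendorB/lun/{name}?size={size_gb * 1024}MB"


# Registry of installed plug-ins, keyed by backend name.
ADAPTERS: dict[str, StorageAdapter] = {
    "vendor-a": VendorAAdapter(),
    "vendor-b": VendorBAdapter(),
}


def provision(backend: str, name: str, size_gb: int) -> str:
    """Provision storage through whichever adapter is registered for backend."""
    adapter = ADAPTERS.get(backend)
    if adapter is None:
        raise ValueError(f"no adapter registered for {backend!r}")
    return adapter.create_volume(name, size_gb)


print(provision("vendor-a", "sap-data", 512))  # vendorA:sap-data:512GB
print(provision("vendor-b", "sap-data", 2))    # vendorB/lun/sap-data?size=2048MB
```

With this structure, supporting a new array means writing and registering one more adapter class; callers of `provision` do not change.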

Companies leveraging NAS storage or hyperconverged, converged, or virtualized infrastructures can also rely on interoperability and portability for site recovery and Disaster Recovery (DR) planning. They can do so by replicating data through software adapters and plug-ins that integrate seamlessly into the infrastructure.

Third-party data protection software can also be made part of this integration. Such software can provide a common policy framework within the ecosystem by using either host- or storage-based replication to back up files to on-premises or public cloud storage. This helps meet requirements for high availability, business continuity, and long-distance disaster recovery for critical applications and data.
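A common policy framework can be as simple as one policy definition evaluated the same way no matter which replication mechanism produced the replica. The Python sketch below assumes a hypothetical `ReplicationPolicy` with a recovery point objective (RPO) and flags the assets whose most recent replica is older than that RPO; the asset names, targets, and timestamps are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ReplicationPolicy:
    """Hypothetical policy shared by host- and storage-based replication."""
    name: str
    rpo: timedelta  # maximum tolerated age of the newest replica
    target: str     # e.g. "on-prem-dr-site" or "public-cloud"


def rpo_violations(last_replicated: dict[str, datetime],
                   policy: ReplicationPolicy,
                   now: datetime) -> list[str]:
    """Return, sorted, the assets whose newest replica is older than the RPO."""
    return sorted(asset for asset, stamp in last_replicated.items()
                  if now - stamp > policy.rpo)


now = datetime(2021, 1, 1, 12, 0)
critical = ReplicationPolicy("critical-apps", timedelta(minutes=15), "public-cloud")
last_replicated = {
    "erp-db": now - timedelta(minutes=5),    # replicated by a host-based agent
    "file-share": now - timedelta(hours=2),  # replicated by the storage array
}
print(rpo_violations(last_replicated, critical, now))  # ['file-share']
```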

Moreover, you can achieve interoperability by adding automated workflows, or by developing web services such as storage or network connector services for an orchestrator. This lets you provision storage for datastores, databases, or backups. It also enables network equipment provisioning and new networking services that accelerate the deployment of compute infrastructure resources.
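An orchestrator that chains storage and network connector services can be sketched as an ordered workflow in which each step has a matching undo action. In this Python sketch the `Step` type, the `run_workflow` function, the connector functions, and the context keys are all hypothetical; the point is the pattern: run steps in order and, if one fails, roll back the completed steps in reverse so that no half-provisioned resources are left behind.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One connector action in a workflow, paired with its undo action."""
    name: str
    run: Callable[[dict], None]
    undo: Callable[[dict], None]


def run_workflow(steps: list[Step], ctx: dict) -> tuple[bool, list[str]]:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    done: list[Step] = []
    log: list[str] = []
    for step in steps:
        try:
            step.run(ctx)
        except Exception as exc:
            log.append(f"failed: {step.name} ({exc})")
            for prev in reversed(done):
                prev.undo(ctx)
                log.append(f"rolled back: {prev.name}")
            return False, log
        done.append(step)
        log.append(f"ok: {step.name}")
    return True, log


# Hypothetical storage and network connector actions.
def provision_datastore(ctx: dict) -> None:
    ctx["datastore"] = "ds-01"


def remove_datastore(ctx: dict) -> None:
    ctx.pop("datastore", None)


def configure_vlan(ctx: dict) -> None:
    if "vlan" not in ctx:
        raise ValueError("no VLAN id supplied")
    ctx["network"] = f"vlan-{ctx['vlan']}"


def remove_vlan(ctx: dict) -> None:
    ctx.pop("network", None)


steps = [
    Step("storage connector: datastore", provision_datastore, remove_datastore),
    Step("network connector: VLAN", configure_vlan, remove_vlan),
]
print(run_workflow(steps, {"vlan": 120}))
print(run_workflow(steps, {}))  # VLAN step fails, so the datastore is rolled back
```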

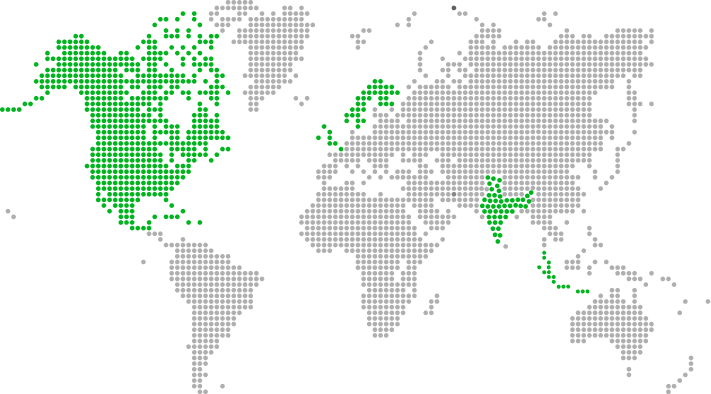

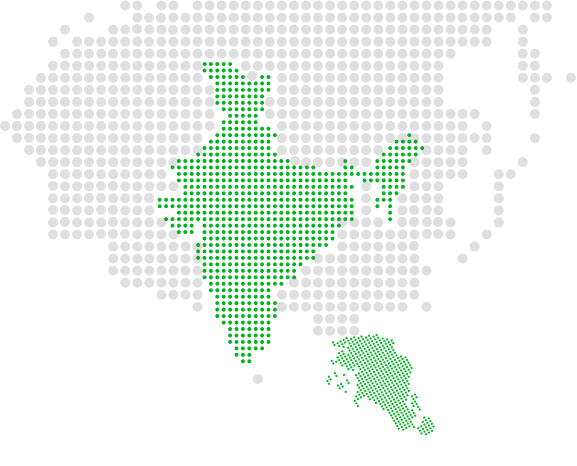

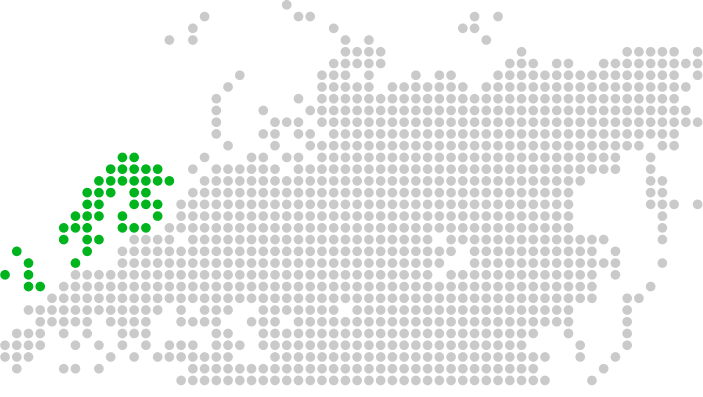

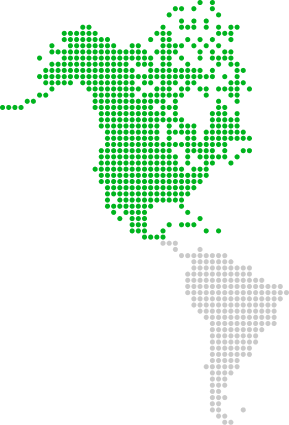

Xoriant can support you with technical expertise

Technical challenges and disruption risks are likely to emerge during ecosystem expansion. Xoriant supported a leading hyperconverged infrastructure provider’s expansion journey and helped the client increase its market share in the data center ecosystem.

Attend our webinar to hear ecosystem insights from guest speaker Pankaj Sinha, Engineering Director, Nutanix, and to see real-world examples of how ecosystem expansion can maximize the value of your infrastructure products.

Connect with our Ecosystem Experts.

References:

https://www.idc.com/research/viewtoc.jsp?containerId=US43171317

https://www.vmware.com/in/solutions/software-defined-datacenter.html

https://www.vmware.com/in/products/vsan.html
